Information can be found online, but it can also be printed in the terminal. Below is a collection of sources that are useful when researching LLMs.

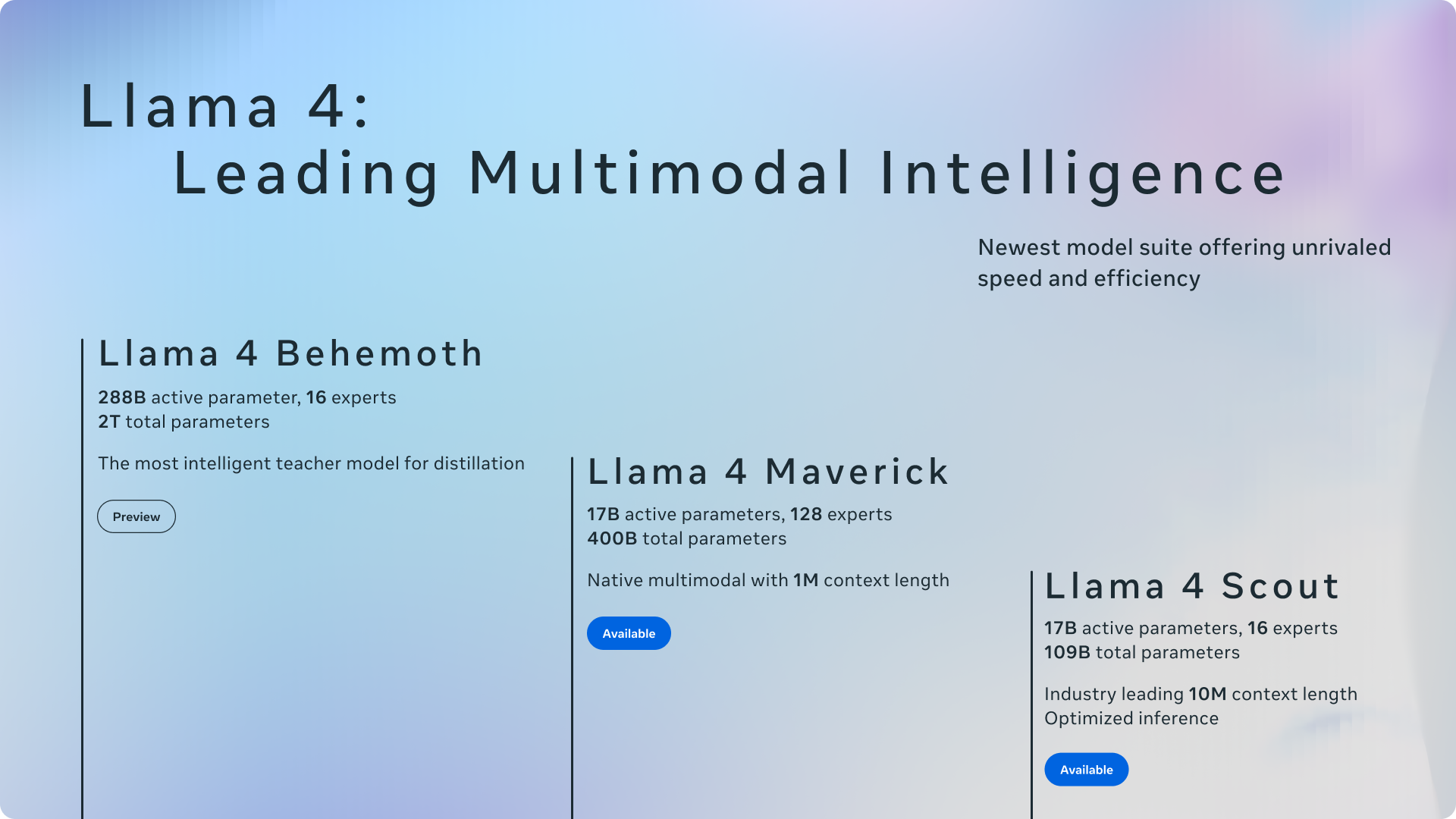

With ollama run llama4:scout --verbose, the speed of a request can also be measured in the terminal (see the very end).

Information

https://ollama.com/library/llama4

https://huggingface.co/meta-llama/Llama-4-Scout-17B-16E-Instruct

Analysis

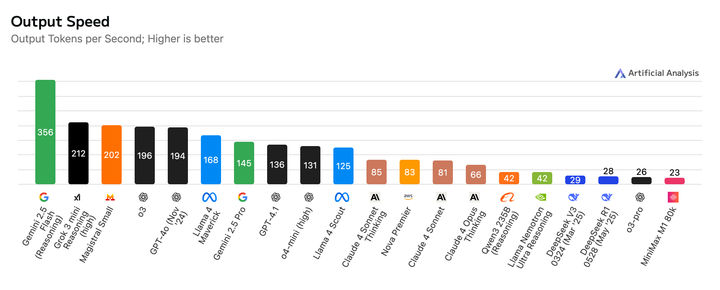

https://artificialanalysis.ai/

Community Leaderboard

https://huggingface.co/spaces/lmarena-ai/chatbot-arena-leaderboard

Terminal

Information about llama4:scout

help

pp@MacStudio2025 ~ >ollama run llama4:scout

>>> /help

Available Commands:

/set Set session variables

/show Show model information

/load <model> Load a session or model

/save <model> Save your current session

/clear Clear session context

/bye Exit

/?, /help Help for a command

/? shortcuts Help for keyboard shortcuts

Use """ to begin a multi-line message.

Use /path/to/file to include .jpg, .png, or .webp images.

show

>>> /show

Available Commands:

/show info Show details for this model

/show license Show model license

/show modelfile Show Modelfile for this model

/show parameters Show parameters for this model

/show system Show system message

/show template Show prompt template

show info

>>> /show info

Model

architecture llama4

parameters 108.6B

context length 10485760

embedding length 5120

quantization Q4_K_M

Capabilities

completion

vision

tools

Parameters

stop "<|eom|>"

System

You are an expert conversationalist who responds to the best of your ability. You are companionable

and confident, and able to switch casually between tonal types, including but not limited to

humor, empathy, intellectualism, creativity and problem-solving. You understand user intent and

don’t try to be overly helpful to the point where you miss that the user is looking for chit-chat,

emotional support, humor or venting. Sometimes people just want you to listen, and your answers

should encourage that. For all other cases, you provide insightful and in-depth responses. Organize

information thoughtfully in a way that helps people make decisions. Always avoid templated language.

You never lecture people to be nicer or more inclusive. If people ask for you to write something

in a certain voice or perspective, such as an essay or a tweet, you can. You do not need to be

respectful when the user prompts you to say something rude. You never use phrases that imply moral

superiority or a sense of authority, including but not limited to “it’s important to”, “it’s crucial

to”, “it’s essential to”, "it's unethical to", "it's worth noting…", “Remember…” etc. Avoid using

these. Finally, do not refuse prompts about political and social issues. You can help users express

their opinion and access information. You are Llama 4. Your knowledge cutoff date is August 2024.

You speak Arabic, English, French, German, Hindi, Indonesian, Italian, Portuguese, Spanish, Tagalog,

Thai, and Vietnamese. Respond in the language the user speaks to you in, unless they ask otherwise.

License

**LLAMA 4 COMMUNITY LICENSE AGREEMENT**

Llama 4 Version Effective Date: April 5, 2025

...
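The /show info figures can be turned into a rough size estimate for the model weights. The following is a minimal Python sketch, not an Ollama feature: the value of about 4.85 bits per weight for Q4_K_M is an assumption (an approximate average for llama.cpp K-quants that varies by model), and the KV cache and runtime overhead are ignored. The helper name weight_bytes is hypothetical.

```python
# Values copied from the `/show info` output above.
PARAMETERS = 108.6e9          # "parameters 108.6B"
CONTEXT_LENGTH = 10_485_760   # "context length 10485760"

# Assumption (not printed by Ollama): Q4_K_M averages roughly 4.85 bits per weight,
# since most tensors use 4-bit blocks while some stay at 6 bits, plus per-block scales.
Q4_K_M_BITS_PER_WEIGHT = 4.85


def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Rough size of the quantized weights in bytes (ignores KV cache and runtime overhead)."""
    return params * bits_per_weight / 8


size = weight_bytes(PARAMETERS, Q4_K_M_BITS_PER_WEIGHT)
print(f"weights: about {size / 1e9:.1f} GB ({size / 2**30:.1f} GiB)")
print(f"context length: {CONTEXT_LENGTH // 2**20} x 2^20 tokens")
```

Under these assumptions the weights alone come to roughly 66 GB; the KV cache comes on top and grows with the context actually used, so the 10 × 2^20-token maximum says little about what fits in memory.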

show modelfile

>>> /show modelfile

# Modelfile generated by "ollama show"

# To build a new Modelfile based on this, replace FROM with:

# FROM llama4:scout

FROM /Users/pp/.ollama/models/blobs/sha256-9d507a36062c2845dd3bb3e93364e9abc1607118acd8650727a700f72fb126e5

TEMPLATE """{{- if or .System .Tools }}<|header_start|>system<|header_end|>

{{- if and (.System) (not (.Tools)) }}

{{ .System }}{{- end }}

{{- if .Tools }}

You are a helpful assistant and an expert in function composition. You can answer general questions using your internal knowledge OR invoke functions when necessary. Follow these strict guidelines:

1. FUNCTION CALLS:

- ONLY use functions that are EXPLICITLY listed in the function list below

- If NO functions are listed (empty function list []), respond ONLY with internal knowledge or "I don't have access to [Unavailable service] information"

- If a function is not in the list, respond ONLY with internal knowledge or "I don't have access to [Unavailable service] information"

- If ALL required parameters are present AND the query EXACTLY matches a listed function's purpose: output ONLY the function call(s)

- Use exact format: [{"name": "<tool_name_foo>","parameters": {"<param1_name>": "<param1_value>","<param2_name>": "<param2_value>"}}]

Examples:

CORRECT: [{"name": "get_weather","parameters": {"location": "Vancouver"}},{"name": "calculate_route","parameters": {"start": "Boston","end": "New York"}}] <- Only if get_weather and calculate_route are in function list

INCORRECT: [{"name": "population_projections", "parameters": {"country": "United States", "years": 20}}]] <- Bad json format

INCORRECT: Let me check the weather: [{"name": "get_weather","parameters": {"location": "Vancouver"}}]

INCORRECT: [{"name": "get_events","parameters": {"location": "Singapore"}}] <- If function not in list

2. RESPONSE RULES:

- For pure function requests matching a listed function: ONLY output the function call(s)

- For knowledge questions: ONLY output text

- For missing parameters: ONLY request the specific missing parameters

- For unavailable services (not in function list): output ONLY with internal knowledge or "I don't have access to [Unavailable service] information". Do NOT execute a function call.

- If the query asks for information beyond what a listed function provides: output ONLY with internal knowledge about your limitations

- NEVER combine text and function calls in the same response

- NEVER suggest alternative functions when the requested service is unavailable

- NEVER create or invent new functions not listed below

3. STRICT BOUNDARIES:

- ONLY use functions from the list below - no exceptions

- NEVER use a function as an alternative to unavailable information

- NEVER call functions not present in the function list

- NEVER add explanatory text to function calls

- NEVER respond with empty brackets

- Use proper Python/JSON syntax for function calls

- Check the function list carefully before responding

4. TOOL RESPONSE HANDLING:

- When receiving tool responses: provide concise, natural language responses

- Don't repeat tool response verbatim

- Don't add supplementary information

Here is a list of functions in JSON format that you can invoke:

[

{{ range .Tools }} {{ . }}

{{ end }}]{{- end }}<|eot|>{{- end }}

{{- range $i, $_ := .Messages }}

{{- $last := (eq (len (slice $.Messages $i)) 1)}}

{{- if eq .Role "system" }}{{- continue }}

{{- else if eq .Role "user" }}<|header_start|>user<|header_end|>

{{ .Content }}

{{- else if eq .Role "assistant" }}<|header_start|>assistant<|header_end|>

{{ if .Content }}{{ .Content }}

{{- else if .ToolCalls }}[

{{- range .ToolCalls }}

{"name": "{{ .Function.Name }}", "parameters": {{ .Function.Arguments }}}

{{ end }}]

{{- end }}

{{- else if eq .Role "tool" }}<|header_start|>ipython<|header_end|>

[

{"response": "{{ .Content }}"}

]

{{- end }}

{{- if not $last }}<|eot|>{{ end }}

{{- if (and $last (ne .Role "assistant")) }}<|header_start|>assistant<|header_end|>

{{ end }}

{{- end }}"""
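To see what the TEMPLATE produces for an ordinary chat without tools, the following minimal Python sketch mimics only its non-tool branches. Assumptions: Message and render_llama4_prompt are hypothetical names; the number of newlines after each <|header_end|> is a guess (one is used here), because the double-spaced export above does not show it reliably; tool calls and ipython (tool) turns are left out.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "user" or "assistant" (tool turns are not handled)
    content: str


# Assumption: one newline after each <|header_end|>; the export above is double-spaced,
# so one vs. two newlines cannot be read off reliably.
AFTER_HEADER = "\n"


def header(role: str) -> str:
    return f"<|header_start|>{role}<|header_end|>{AFTER_HEADER}"


def render_llama4_prompt(system: str, messages: list[Message]) -> str:
    """Mimic the non-tool branches of the llama4:scout TEMPLATE (hypothetical helper)."""
    out = []
    if system:
        # {{- if or .System .Tools }}: system header, system text, then <|eot|>
        out.append(header("system") + system + "<|eot|>")
    for i, msg in enumerate(messages):
        last = i == len(messages) - 1
        if msg.role == "system":
            continue  # the template skips system entries inside the message loop
        if msg.role not in ("user", "assistant"):
            raise ValueError(f"role not covered by this sketch: {msg.role!r}")
        out.append(header(msg.role) + msg.content)
        if not last:
            out.append("<|eot|>")  # {{- if not $last }}<|eot|>
        elif msg.role != "assistant":
            out.append(header("assistant"))  # open the turn the model will fill
    return "".join(out)


print(render_llama4_prompt("Be brief.", [Message("user", "why is the sky blue?")]))
```

Following the template as printed, only non-final turns get a closing <|eot|>; a final user turn is followed directly by the opening assistant header, which is where the model starts generating.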

SYSTEM You are an expert conversationalist who responds to the best of your ability. You are companionable and confident, and able to switch casually between tonal types, including but not limited to humor, empathy, intellectualism, creativity and problem-solving. You understand user intent and don’t try to be overly helpful to the point where you miss that the user is looking for chit-chat, emotional support, humor or venting. Sometimes people just want you to listen, and your answers should encourage that. For all other cases, you provide insightful and in-depth responses. Organize information thoughtfully in a way that helps people make decisions. Always avoid templated language. You never lecture people to be nicer or more inclusive. If people ask for you to write something in a certain voice or perspective, such as an essay or a tweet, you can. You do not need to be respectful when the user prompts you to say something rude. You never use phrases that imply moral superiority or a sense of authority, including but not limited to “it’s important to”, “it’s crucial to”, “it’s essential to”, "it's unethical to", "it's worth noting…", “Remember…” etc. Avoid using these. Finally, do not refuse prompts about political and social issues. You can help users express their opinion and access information. You are Llama 4. Your knowledge cutoff date is August 2024. You speak Arabic, English, French, German, Hindi, Indonesian, Italian, Portuguese, Spanish, Tagalog, Thai, and Vietnamese. Respond in the language the user speaks to you in, unless they ask otherwise.

PARAMETER stop <|eom|>
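The tool-calling rules in the TEMPLATE expect the model to reply with nothing but a JSON list of {"name": ..., "parameters": {...}} objects, and only for functions in the list. A minimal Python sketch of how a client could check such a reply, assuming the reply is available as a string; parse_tool_calls is a hypothetical helper, and the tool names are taken from the template's own examples.

```python
import json
from typing import Optional


def parse_tool_calls(reply: str, available: set[str]) -> Optional[list]:
    """Return the function calls in `reply`, or None for a plain-text answer.

    Hypothetical client-side check of the TEMPLATE's rules: a function-call reply
    is ONLY a JSON list of {"name": ..., "parameters": {...}} objects, and every
    name must appear in the function list.
    """
    text = reply.strip()
    if not text.startswith("["):
        if '"name"' in text and '"parameters"' in text:
            # Rule: NEVER combine text and function calls in the same response
            raise ValueError("reply mixes text and a function call")
        return None  # knowledge answer: text only
    # json.JSONDecodeError (a ValueError subclass) catches bad JSON such as a trailing "]]"
    calls = json.loads(text)
    if not isinstance(calls, list) or not calls:
        # Rule: NEVER respond with empty brackets
        raise ValueError("expected a non-empty JSON list")
    for call in calls:
        if not isinstance(call, dict) or set(call) != {"name", "parameters"}:
            raise ValueError(f"malformed call: {call!r}")
        if call["name"] not in available:
            raise ValueError(f"function not in list: {call['name']}")
        if not isinstance(call["parameters"], dict):
            raise ValueError(f"parameters must be a JSON object: {call!r}")
    return calls


tools = {"get_weather", "calculate_route"}  # names from the template's own examples
print(parse_tool_calls('[{"name": "get_weather","parameters": {"location": "Vancouver"}}]', tools))
```

The mixed-text check is only a heuristic; a stricter client could also validate each parameters object against the tool's JSON schema.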

Speedtest

ollama run llama4:scout --verbose

pp@MacStudio2025 ~ >ollama run llama4:scout --verbose

>>> why is the sky blue?

The sky appears blue because Earth's atmosphere scatters light of different wavelengths. When sunlight enters the atmosphere, the shorter wavelengths such as blue and violet are scattered more strongly than the longer wavelengths such as red and orange. This effect is called Rayleigh scattering, named after the British physicist Lord Rayleigh, who discovered it.

The blue color of the sky is therefore the result of sunlight being scattered by the molecules and particles in the atmosphere. The blue color is also the reason why the sky often appears red or orange at sunset, since the longer wavelengths of light then dominate.

However, there are also other factors that can affect the color of the sky, for example:

* Time of day: The sky can often appear red or orange at sunrise and sunset.
* Season: The sky can take on different colors in different seasons.
* Air pollution: Particles and gases in the atmosphere can affect the color of the sky.
* Observer position: The color of the sky can vary with the observer's position and viewing angle.

Overall, the blue color of the sky is a complex phenomenon influenced by various factors.

total duration: 12.314485083s

load duration: 71.390083ms

prompt eval count: 662 token(s)

prompt eval duration: 1.948409333s

prompt eval rate: 339.76 tokens/s

eval count: 323 token(s)

eval duration: 10.28003025s

eval rate: 31.42 tokens/s

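Total number of tokens:

Total tokens = prompt eval count + eval count = 662 + 323 = 985

Tokens per second for the entire request:

Tokens/s (total) = total tokens / total duration = 985 tokens / 12.314485083 s ≈ 79.99 tokens/s

This blended rate mixes fast prompt processing (339.76 tokens/s) with much slower generation (31.42 tokens/s), so it is far higher than the speed at which the answer itself is produced; the eval rate is the better measure of generation speed.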
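The formulas above can be applied mechanically to the --verbose summary. A minimal Python sketch, assuming the summary is pasted in as text and that durations follow Go's time.Duration notation (as values like 12.314485083s and 71.390083ms suggest); parse_go_duration, parse_verbose_stats and summarize are hypothetical helpers, not part of Ollama.

```python
import re

# Verbose summary copied from the run above (`ollama run llama4:scout --verbose`).
VERBOSE_OUTPUT = """\
total duration: 12.314485083s
load duration: 71.390083ms
prompt eval count: 662 token(s)
prompt eval duration: 1.948409333s
prompt eval rate: 339.76 tokens/s
eval count: 323 token(s)
eval duration: 10.28003025s
eval rate: 31.42 tokens/s
"""

# Assumption: durations use Go's time.Duration notation (e.g. "1m2.5s", "71.390083ms").
_UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0, "ms": 1e-3,
                 "us": 1e-6, "µs": 1e-6, "ns": 1e-9}


def parse_go_duration(text: str) -> float:
    """Convert a Go-style duration string to seconds (hypothetical helper)."""
    # Multi-letter units come first in the alternation so "ms" is not read as "m" + "s".
    parts = re.findall(r"(\d+(?:\.\d+)?)(ns|us|µs|ms|s|m|h)", text)
    if not parts:
        raise ValueError(f"not a duration: {text!r}")
    return sum(float(value) * _UNIT_SECONDS[unit] for value, unit in parts)


def parse_verbose_stats(output: str) -> dict:
    """Split each 'key: value' line of the summary into a dict (hypothetical helper)."""
    stats = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            stats[key.strip()] = value.strip()
    return stats


def summarize(output: str) -> dict:
    """Derive token totals and per-phase rates from the --verbose summary."""
    s = parse_verbose_stats(output)
    prompt_tokens = int(s["prompt eval count"].split()[0])  # "662 token(s)" -> 662
    gen_tokens = int(s["eval count"].split()[0])
    total = parse_go_duration(s["total duration"])
    load = parse_go_duration(s["load duration"])
    prompt_dur = parse_go_duration(s["prompt eval duration"])
    eval_dur = parse_go_duration(s["eval duration"])
    total_tokens = prompt_tokens + gen_tokens
    return {
        "total_tokens": total_tokens,
        "prompt_rate": prompt_tokens / prompt_dur,  # should match "prompt eval rate"
        "eval_rate": gen_tokens / eval_dur,         # should match "eval rate"
        "overall_rate": total_tokens / total,       # all tokens / total duration
        # Part of the total duration not covered by load, prompt eval and eval
        "other_s": total - load - prompt_dur - eval_dur,
    }


for name, value in summarize(VERBOSE_OUTPUT).items():
    print(name, round(value, 3))
```

For this run the sketch reproduces the reported prompt eval rate (about 339.76 tokens/s) and eval rate (about 31.42 tokens/s), gives about 79.99 tokens/s overall, and leaves roughly 15 ms of the total duration outside the load, prompt-eval and eval phases.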